Since the early days of multitasking operating systems, fragmentation has been an issue. It degrades read and write performance on traditional magnetic hard drives, and it has its share of negative effects even on today’s solid-state media. How do we reduce fragmentation, and should we fight it on SSD drives? Read along to find out!

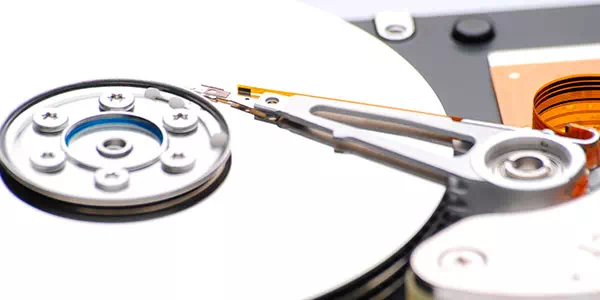

How Does Fragmentation Occur?

Any file you’re about to write to a disk will be placed into the largest contiguous chunk of free space. When you have a fresh hard drive with lots of empty space, the largest contiguous chunk is going to be larger than the file you’re going to save. Fast-forward a few days, weeks and months of saving files, deleting them and saving files again, and you no longer have that much free space on your hard drive. And even if you do, it’s probably scattered around the disk in the form of multiple smaller chunks of free space. Any file you’re about to write now will still be placed into the largest chunk of free space, only this time the file may actually be bigger than the largest free block available.
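To make the effect concrete, here is a minimal Python sketch of the "largest free chunk first" policy described above. It is a toy model, not how any real file system allocates space: the disk is a short list of blocks, and the helper names (`free_extents`, `write_file`, `delete_file`) are made up for this illustration.

```python
def free_extents(disk):
    """Return (start, length) runs of free blocks; None marks a free block."""
    extents, start = [], None
    for i, owner in enumerate(disk + ["end"]):  # sentinel closes a trailing run
        if owner is None and start is None:
            start = i
        elif owner is not None and start is not None:
            extents.append((start, i - start))
            start = None
    return extents


def write_file(disk, name, size):
    """Fill the largest free extents first; return the fragments used."""
    if disk.count(None) < size:
        raise ValueError("not enough free space")
    fragments, remaining = [], size
    while remaining > 0:
        start, length = max(free_extents(disk), key=lambda e: e[1])
        used = min(length, remaining)
        disk[start:start + used] = [name] * used
        fragments.append((start, used))
        remaining -= used
    return fragments


def delete_file(disk, name):
    """Mark every block owned by the file as free again."""
    for i, owner in enumerate(disk):
        if owner == name:
            disk[i] = None


disk = [None] * 12                      # a fresh, empty 12-block disk
for name in "abcd":
    write_file(disk, name, 3)           # each file lands in one piece
delete_file(disk, "a")
delete_file(disk, "c")                  # leaves two separate 3-block holes
print(write_file(disk, "e", 5))         # [(0, 3), (6, 2)]: two fragments
```

The last file needs five blocks, but the largest hole is only three blocks long, so it ends up split across both holes.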

When this happens, the operating system will store parts of the file in different chunks regardless of their physical location on the disk. If you have a really large file and a really fragmented hard drive, the file might be broken into as many as several hundred fragments. When reading such a file, the system will have to access each chunk one after another. The hard drive will need to perform seek operations, moving magnetic heads to a new place and waiting for them to settle. This happens for each and every chunk, significantly slowing down file read speed. Instead of a single, contiguous read with the maximum transfer rate your hard drive is capable of (maybe 100-150 MB/s), you’ll be getting a much, much slower rate of around 10-30 MB/s (depending on how large and how fragmented the file is). Sounds bad enough? The worst part is still ahead.
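A back-of-the-envelope estimate shows why the slowdown is so dramatic. The sketch below assumes a sequential rate of 120 MB/s and about 12 ms per seek (head movement plus settling); both figures are illustrative assumptions, not measurements, and the function name is made up for this example.

```python
def effective_rate_mb_s(file_mb, fragments, seq_rate_mb_s=120.0, seek_ms=12.0):
    """Estimate the read rate when every fragment costs one seek."""
    transfer_s = file_mb / seq_rate_mb_s       # time spent actually reading
    seek_s = fragments * seek_ms / 1000.0      # time spent repositioning heads
    return file_mb / (transfer_s + seek_s)


# A 100 MB file in one piece versus the same file in 300 fragments.
print(round(effective_rate_mb_s(100, 1), 1))
print(round(effective_rate_mb_s(100, 300), 1))
```

Under these assumptions the contiguous file reads at close to the full sequential rate, while the 300-fragment version drops to a little over 22 MB/s, which is in line with the range quoted above.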

Having a large number of highly fragmented files sitting on your hard drive not only reduces read and write performance, but can also affect the longevity of the hard drive itself. Constant movements of the magnetic heads put additional strain on the drive’s mechanics, causing premature wear and reducing its effective lifespan.

If that’s not enough, we have the last bit of bad news for you. Fragmented files are much harder to recover if anything happens to your data. A tiny corruption in the file system, an accidentally deleted document or an exciting experiment with Ubuntu can easily make your data suddenly disappear.

If you have followed our blog for some time, you may already know about context-aware recovery algorithms based on signature search. Context-aware recovery works by scanning the disk at a low level in an attempt to detect files of known types by analyzing raw data and matching it against a database of known patterns. In other words, context-aware recovery reads the disk surface one sector after another, analyzing each sector’s content and trying to find out whether or not it contains what appears to be a file header.
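The sector-by-sector scan can be sketched in a few lines of Python. This is only an illustration of the idea, not the algorithm any particular recovery tool uses: it assumes 512-byte sectors, assumes files begin on a sector boundary, and checks just three well-known signatures. The names `SIGNATURES` and `find_headers` are hypothetical.

```python
SECTOR_SIZE = 512  # assumed sector size for this sketch

# A tiny "database of known patterns": leading bytes of a few file formats.
SIGNATURES = {
    "jpeg": b"\xff\xd8\xff",
    "png": b"\x89PNG\r\n\x1a\n",
    "pdf": b"%PDF-",
}


def find_headers(raw, sector_size=SECTOR_SIZE):
    """Return (sector_index, file_type) for each sector that starts with a known signature."""
    hits = []
    for offset in range(0, len(raw), sector_size):
        sector = raw[offset:offset + sector_size]
        for file_type, magic in SIGNATURES.items():
            if sector.startswith(magic):
                hits.append((offset // sector_size, file_type))
    return hits


# Build a fake 4-sector disk image with a JPEG header in sector 1
# and a PDF header in sector 3.
image = bytearray(4 * SECTOR_SIZE)
image[1 * SECTOR_SIZE:1 * SECTOR_SIZE + 3] = SIGNATURES["jpeg"]
image[3 * SECTOR_SIZE:3 * SECTOR_SIZE + 5] = SIGNATURES["pdf"]
print(find_headers(bytes(image)))  # [(1, 'jpeg'), (3, 'pdf')]
```

A real scanner would read the raw device instead of an in-memory buffer and would validate more of the header than the first few bytes to cut down on false positives.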

After finding a file header, the algorithm will analyze it in order to determine the file’s length (this information is often contained in the header or can be otherwise determined by analyzing subsequent data). Now, knowing where the file starts and how long the file is, the context-aware algorithm can try to determine which sectors on the disk belong to that file.

There are several ways to do that. The dumb way is simply assuming that a certain number of sectors after the one that contains the file’s header belong to that file. This method is dumb because of, well, fragmentation. However, if there is no file system available, even the smartest data recovery algorithm will have to make use of the ‘dumb’ method, as more advanced techniques will not be available.

The smarter method checks the file system before making assumptions. Suppose we have sectors 1, 2, 3 and 4. A JPEG file header is detected in sector 1, which the file system lists as “available”, and the header indicates a file length of exactly 2 sectors. Which sectors should we pick? The dumb algorithm will always pick sectors 1 and 2 regardless of anything else. The smarter one will check the file system to determine if sector 2 is listed as “free space” or if it’s already part of a different file. If sector 2 belongs to another file, the algorithm will check sectors 3 and 4. If, for example, sector 3 is listed as “free space”, the smart algorithm will assume that the JPEG file in question was located in sectors 1 and 3.
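The same example can be written out as a short Python sketch. Both functions are simplified illustrations with made-up names (`dumb_pick`, `smart_pick`), and the `status` dictionary stands in for whatever allocation information the file system still provides.

```python
def dumb_pick(header_sector, length):
    """Assume the file occupies consecutive sectors starting at its header."""
    return list(range(header_sector, header_sector + length))


def smart_pick(header_sector, length, status):
    """Skip sectors the file system reports as belonging to another file."""
    picked = [header_sector]
    sector = header_sector + 1
    while len(picked) < length and sector in status:
        if status[sector] == "free":
            picked.append(sector)
        sector += 1
    return picked


# Sector 1 holds the JPEG header; sector 2 is owned by a different file.
status = {1: "free", 2: "in use", 3: "free", 4: "free"}
print(dumb_pick(1, 2))           # [1, 2]
print(smart_pick(1, 2, status))  # [1, 3]
```

Note that `smart_pick` simply takes the next free sectors in order, so it is still a guess: it has no way of knowing whether sector 3 or sector 4 really held the second half of the file.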

Of course, both algorithms could be wrong. But you get the idea. The fewer fragments a file has, the higher its probability of successful recovery.

Reducing Fragmentation

How do you reduce fragmentation to an absolute minimum? First and foremost, there are defragmentation tools. Windows 7 has a decent disk optimization and defragmentation tool built in. A really good one appeared in Windows 8, and a great defragmentation utility is available with Windows 10. Regardless of which version of Windows you use, setting up the Disk Optimization and Defragmentation utility to run periodically on all disks and partitions is a great way to reduce fragmentation.

On busy hard drives with little free space available, defragmentation may not be effective. There’s really no workaround other than ensuring that each and every partition on your hard drive has a certain percentage of its space unused. Generally, keeping about 20 to 40 per cent of the disk free is enough to ensure smooth operation of disk defragmentation tools. In addition, keeping that much free space allows Windows to pick larger contiguous chunks every time you’re about to save a new file, preventing fragmentation from occurring in the first place.
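If you would rather check the free-space rule with a script than by eye, a small Python sketch like the one below will do. It relies on the standard library’s `shutil.disk_usage`; the 20 per cent default mirrors the lower bound mentioned above, and the helper names are made up for this example.

```python
import shutil


def free_percent(total_bytes, free_bytes):
    """Free space as a percentage of the total capacity."""
    return 100.0 * free_bytes / total_bytes


def has_headroom(path, minimum_percent=20.0):
    """True if the volume containing `path` has at least `minimum_percent` free."""
    usage = shutil.disk_usage(path)
    return free_percent(usage.total, usage.free) >= minimum_percent


# Check the volume that holds the current directory.
print(has_headroom("."))
```

Pass a drive root such as `C:\\` on Windows to check a specific partition, and raise `minimum_percent` if you want a more conservative margin.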

So: have a defragmenter run on a schedule and ensure that your disk has plenty of free space, and you’re good. But does this advice apply to solid-state media such as USB flash drives, memory cards and most importantly SSD drives?

Solid-State Drives and Fragmentation

It’s common knowledge that SSD drives should not (or MUST NOT) be defragmented. Indeed, SSD drives do not have moving mechanical parts. There are no moving heads that need to jump over to a new sector and then need time to stabilize. An SSD drive is as fast at reading a number of consecutive sectors as it is at reading a number of random sectors (well, to a certain degree, and provided that the size of a disk sector is the same as, and is aligned to, the size of the SSD’s minimum data block).

If that’s not enough of an argument, consider that SSD drives based on NAND flash memory have a limited lifespan. Their lifespan is limited by the number of write operations to each flash cell. Moving data around (which is what a disk defragmentation tool does) puts a lot of unnecessary wear onto the flash cells without providing any noticeable benefit to read or write speeds.

Finally, SSD drives don’t store data in a contiguous or consecutive manner internally. Instead, built-in controllers in SSD drives constantly remap logical and physical data blocks in order to distribute wear equally among the many flash cells.
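The remapping idea can be illustrated with a deliberately simplified Python model. Real SSD controllers are far more sophisticated and their firmware is proprietary, so treat the `ToyFTL` class below as a hypothetical sketch of one possible policy (always write to the least-worn free block), not as a description of any actual drive.

```python
class ToyFTL:
    """Toy flash translation layer: maps logical blocks to physical ones."""

    def __init__(self, physical_blocks):
        self.erase_counts = [0] * physical_blocks
        self.free = set(range(physical_blocks))
        self.mapping = {}  # logical block -> physical block

    def write(self, logical):
        """Write a logical block to the least-worn free physical block."""
        # Ties are broken by the lowest block number to keep the demo predictable.
        # Raises ValueError if no free block is left (no spare capacity modeled).
        target = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(target)
        old = self.mapping.get(logical)
        if old is not None:
            # The previous copy is stale: erase it and return it to the pool.
            self.erase_counts[old] += 1
            self.free.add(old)
        self.mapping[logical] = target
        return target


ftl = ToyFTL(physical_blocks=5)
for logical in (0, 1, 2):
    ftl.write(logical)       # first writes land in physical blocks 0, 1, 2
ftl.write(1)                 # rewriting logical block 1 moves it elsewhere
print(ftl.mapping)           # {0: 0, 1: 3, 2: 2}
```

After a single rewrite, logical blocks 0, 1 and 2 already live in physical blocks 0, 3 and 2, so "consecutive" at the file system level says little about where the data physically sits.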

With no defragmentation available, how do you keep your files in one piece? The only way to avoid fragmentation on SSD drives is to keep a sizeable amount of free space. Make sure that at least 30 per cent of your SSD drive is free. I know, it’s not easy since SSD drives are typically much smaller than their mechanical counterparts. Yet, keeping dedicated free space on an SSD helps the disk perform internal maintenance tasks such as wear leveling and pre-emptive block erase (TRIM and garbage collection) much faster and more efficiently compared to a nearly full unit.